Split Testing Guide: Master Effective Experiments in 2026


Date published: 15 December 2025

In 2026, digital marketers and business owners face more competition than ever. Data-driven decision-making is now essential for anyone who wants to stay ahead and grow their business.

Mastering split testing gives you a powerful edge. It lets you compare versions of your site, ads, or user journeys, so you can stop guessing and start making changes that actually increase conversions, save budget, and create better customer experiences.

This guide shows you exactly how to master split testing in 2026. We’ll cover the fundamentals, explain the difference from A/B testing, reveal the business value, walk through the step-by-step process with examples, dive into statistical models, help you choose the right tools, and explore future trends.

Split testing is the backbone of data-driven marketing. If you've ever wondered why some websites seem to convert visitors into customers effortlessly, split testing is usually the secret sauce behind the scenes.

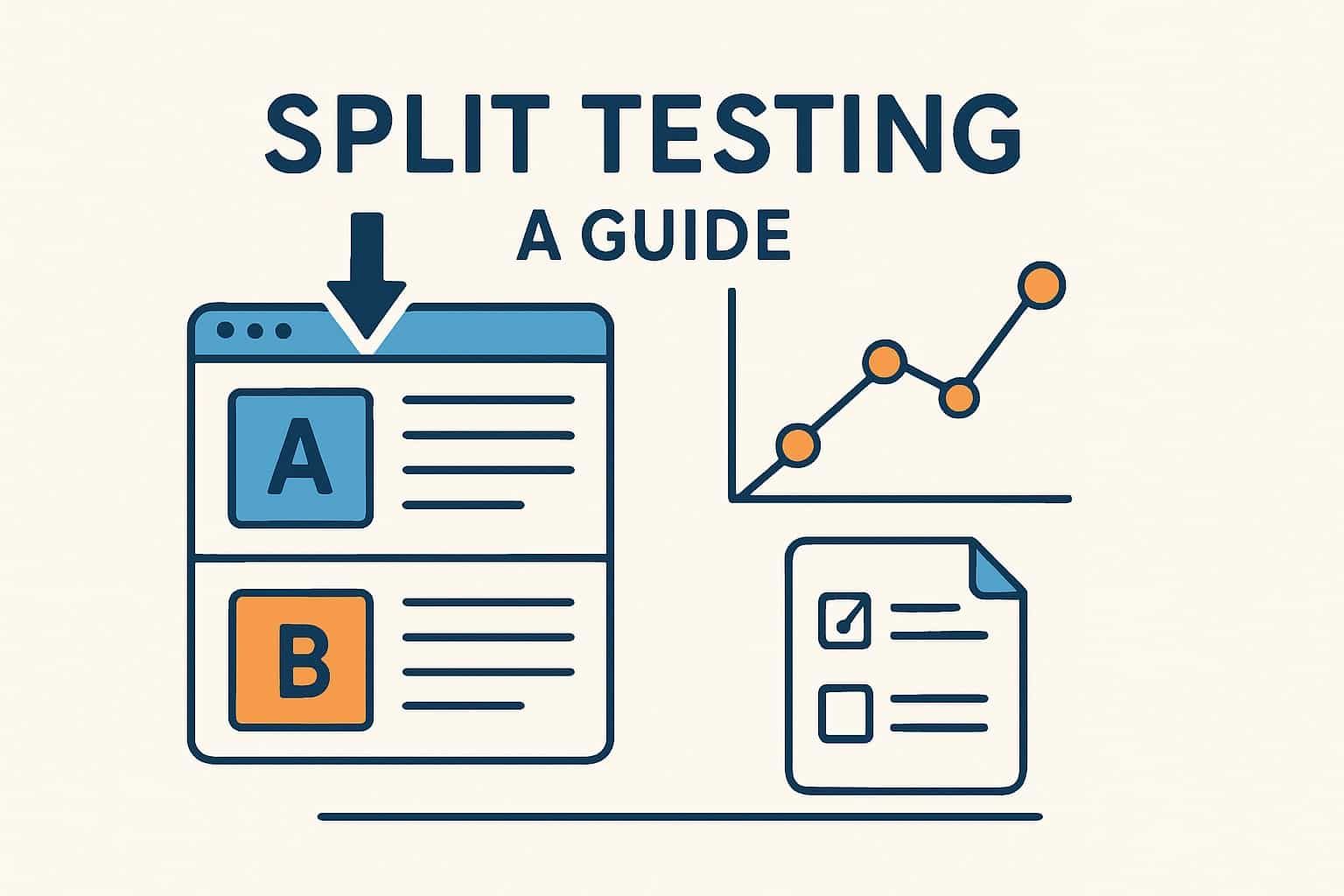

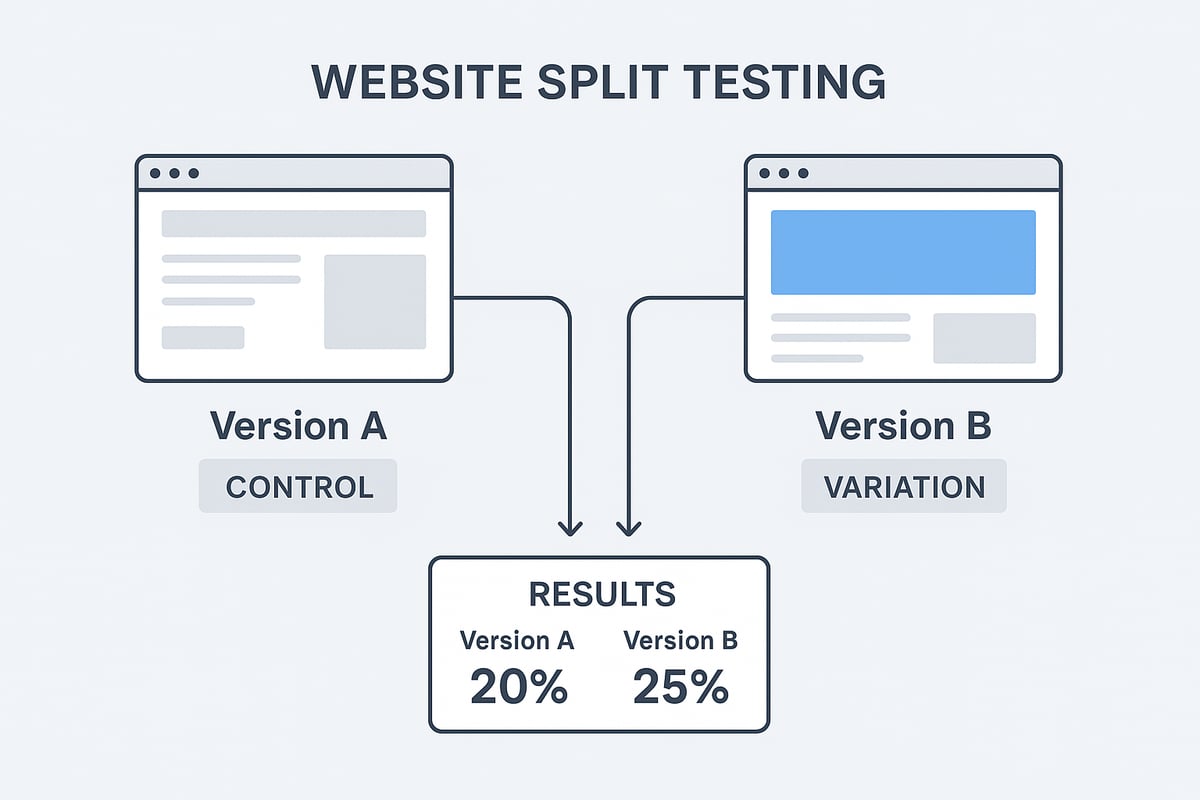

Split testing is a controlled experiment where you randomly show users different versions of a web page, ad, or email to see which performs best. You might hear terms like split testing, A/B testing, or multivariate testing used together, but they have subtle differences.

A/B testing usually compares just two versions, A and B, while split testing can include more variations or even radically different designs. Multivariate testing goes further, testing combinations of several elements at once.

Picture a chocolate ecommerce site testing two banner offers: one with "Free Delivery Over £20" and another with "10% Off First Order." Each visitor sees only one version, unaware they're part of a test. The original is called the control, while the new version is the variation.

Common use cases include landing pages, checkout flows, email campaigns, and ad creatives. Split testing is foundational to conversion rate optimisation strategies, helping you move from guesswork to growth.

You might hear marketers use split testing and A/B testing as if they mean the same thing. Technically, A/B testing is about comparing just two versions: A and B. Split testing focuses on splitting your traffic between multiple versions, whether that's two, three, or more.

Multivariate testing is a step up, letting you test multiple elements—like headline, image, and button text—at the same time. According to Contentsquare, split testing often means comparing completely different layouts, not just small tweaks.
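Multivariate testing gets expensive quickly because every combination of options becomes its own variant, and each one needs a share of your traffic. The Python sketch below enumerates a full-factorial design so you can see how fast the count grows. The two headlines reuse the chocolate banner offers, but the image and button options are made up for illustration.

```python
from itertools import product

# Hypothetical elements and options for a multivariate test on a banner.
elements = {
    "headline": ["Free Delivery Over £20", "10% Off First Order"],
    "image": ["product_shot", "lifestyle_photo"],
    "button_text": ["Shop now", "Treat yourself", "Add to basket"],
}

def full_factorial(elements: dict[str, list[str]]) -> list[dict[str, str]]:
    """List every combination of options; each one is a separate variant."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]

variants = full_factorial(elements)
print(len(variants))  # 2 * 2 * 3 = 12 combinations
for v in variants[:3]:
    print(v)
```

Three elements with 2, 2 and 3 options already produce 12 variants, so each receives roughly a twelfth of your visitors. That is why multivariate tests usually suit high-traffic pages.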

So, when should you use each? Use A/B testing for quick, focused changes, like CTA button colour. Use split testing if you're evaluating big design overhauls. Multivariate testing helps when you want to understand how different elements interact. All of them aim to find the variant that drives the most conversions.

Relying on gut instinct or “best practices” often leads to missed opportunities. Split testing lets you make decisions based on real user behaviour, not the HiPPO (Highest Paid Person’s Opinion) or industry trends that may not fit your audience.

Businesses see measurable gains: higher conversion rates, increased revenue, and lower bounce rates. Take Ben.nl, who boosted conversions by 17.63% just by adjusting where the colour palette appeared on product pages. Even seasoned marketers get it wrong sometimes—split testing lets your audience “vote” with their actions.

In today’s competitive digital landscape, split testing is a proven driver of business growth. It’s how leaders stay ahead.

Think split testing is only for big brands or high-traffic sites? Think again. Businesses of any size can benefit, although low-traffic sites need bolder changes or longer tests to reach a reliable result. Another myth: it’s about throwing random ideas at the wall. In reality, effective split testing starts with evidence-based hypotheses and a clear plan.

Some believe split testing is the entire CRO process. Not true—it’s a key tool, but real optimisation involves research, analysis, and ongoing iteration. As Contentsquare points out, split testing is about validating ideas, not just discovering them.

Bottom line: split testing is scalable, structured, and universally applicable across industries.

Companies that embrace split testing consistently report better conversion rates and higher revenue. Small changes, such as tweaking a CTA colour or image placement, can lead to impressive results.

Industry benchmarks from platforms like VWO and Contentsquare show that split testing works for ecommerce, SaaS, B2B, and service businesses alike. It’s a staple for anyone serious about digital growth.

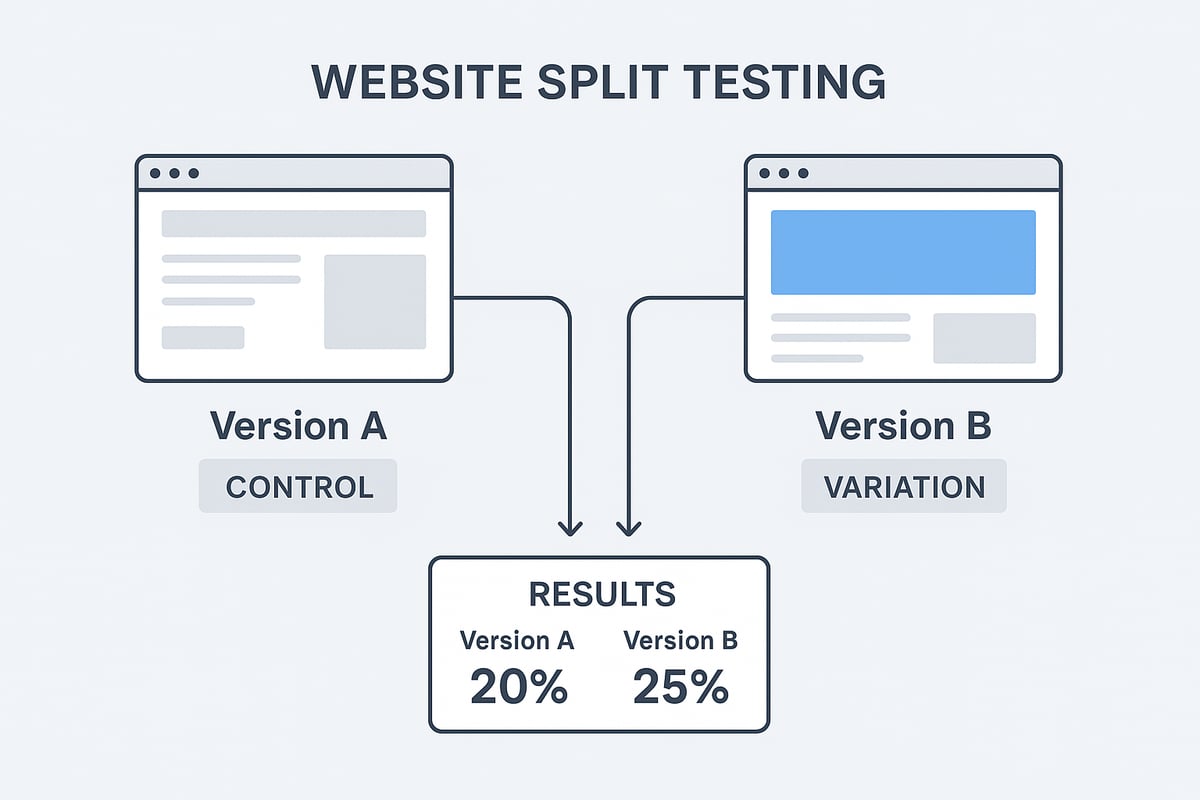

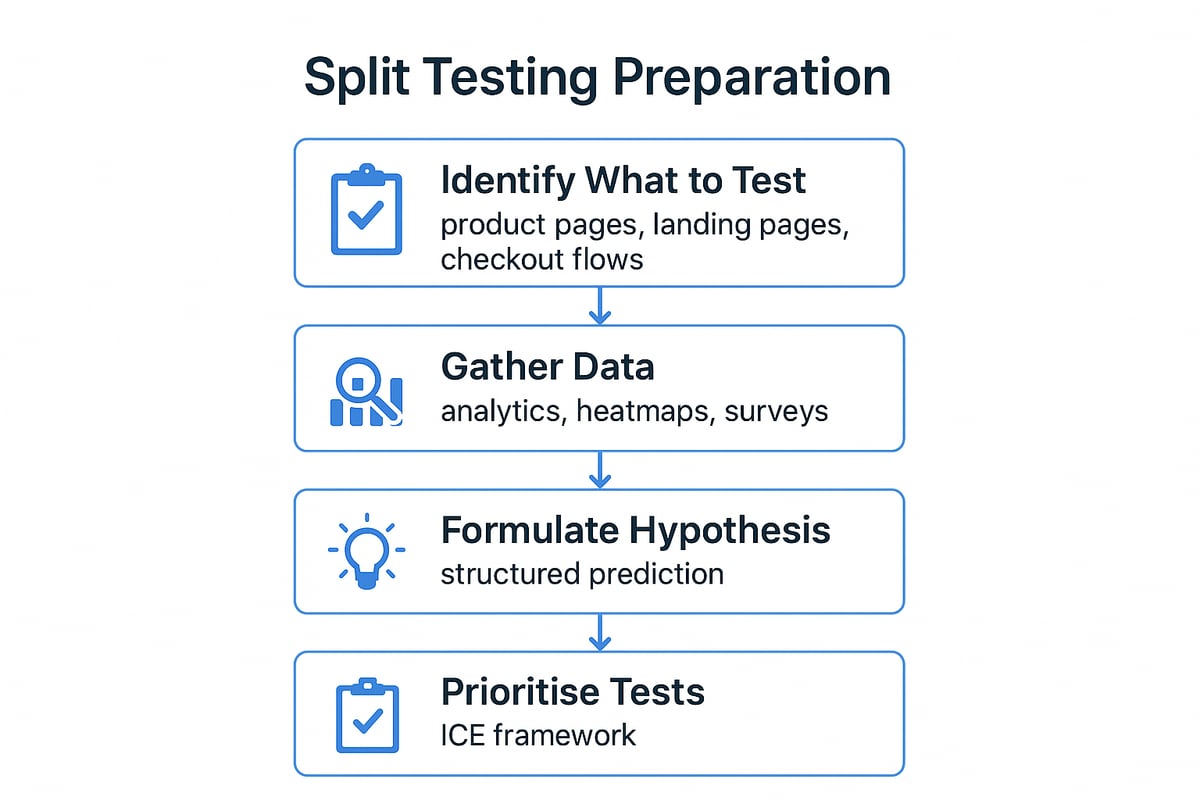

Getting your split testing process right before you start is where the real magic happens. A little planning upfront saves you wasted time and delivers results that move the needle.

Start by pinpointing where split testing will have the greatest impact. Focus on high-value pages like product pages, landing pages, and checkout flows. These are the spots where even small tweaks can lead to a surge in conversions.

Look for elements that directly influence user action. This could be headlines, call-to-action buttons, images, forms, testimonials, or even entire layouts. For inspiration on where to start, check out these landing page optimisation tips.

Use analytics to spot bottlenecks and high-exit pages. Prioritise elements that influence your main metrics, whether that’s sales, leads, or signups. Remember, split testing is most powerful when it targets areas that drive your business goals.

Effective split testing relies on more than guesswork. Use quantitative data from sources like Google Analytics, heatmaps, and funnel analysis to see where users drop off. Pair this with qualitative insights from customer feedback, surveys, or session replays.

Heatmaps, for example, can show you exactly where attention fades or confusion sets in. Funnel analysis pinpoints stages where users abandon ship. By combining numbers with real user stories, you get a complete picture.

The goal is to base every split testing idea on solid data, not assumptions. Identify recurring patterns and friction points, then use these insights to uncover your biggest opportunities for improvement.

A strong split testing hypothesis is the backbone of any successful experiment. Don’t just test random ideas. Instead, create a clear, evidence-based prediction: “If we change X, we expect Y to happen because of Z.”

Use a framework to keep it structured. Rate your hypothesis by potential impact, confidence, and how easy it is to implement. For example, if data shows users ignore your signup button, you might predict that moving it higher will increase clicks.

Avoid the trap of testing for the sake of it. Focus on changes that are likely to shift the needle on your key metrics. Structured hypotheses ensure your split testing isn’t just busywork, but a strategic lever for growth.

With a list of hypotheses in hand, it’s time to prioritise. Score each idea using frameworks like ICE (Impact, Confidence, Ease) or PIE (Potential, Importance, Ease). This helps you work smarter, not harder.
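Here is a minimal Python sketch of ICE scoring for a hypothetical backlog. The 1-10 scales, idea names and scores are made up, and averaging the three scores is only one convention (some teams multiply them instead), so treat it as an illustration rather than a standard formula.

```python
from dataclasses import dataclass

@dataclass
class Idea:
    name: str
    impact: int      # 1-10: how much could this move the key metric?
    confidence: int  # 1-10: how strong is the supporting evidence?
    ease: int        # 1-10: how cheap is it to build and launch?

    @property
    def ice_score(self) -> float:
        # Simple average of the three scores (one common ICE convention).
        return (self.impact + self.confidence + self.ease) / 3

def prioritise(ideas: list[Idea]) -> list[Idea]:
    """Return ideas sorted from highest to lowest ICE score."""
    return sorted(ideas, key=lambda idea: idea.ice_score, reverse=True)

backlog = [
    Idea("Change footer link colour", impact=2, confidence=4, ease=10),
    Idea("Redesign checkout flow", impact=9, confidence=6, ease=3),
    Idea("Move signup CTA above the fold", impact=7, confidence=8, ease=9),
]

for idea in prioritise(backlog):
    print(f"{idea.ice_score:.1f}  {idea.name}")
```

The checkout redesign has the highest potential impact but ranks below the CTA move because it is harder to build, while the footer tweak ranks last, in line with the point that checkout changes usually beat footer fiddling.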

Start with “quick wins” that are simple to implement and likely to show results fast. Plan to test one variable at a time so you can be confident about what caused any change.

Make sure your split testing roadmap is always tied back to business priorities. For example, testing a checkout tweak usually beats fiddling with the footer. Iterative, focused testing builds momentum and delivers compounding gains over time.

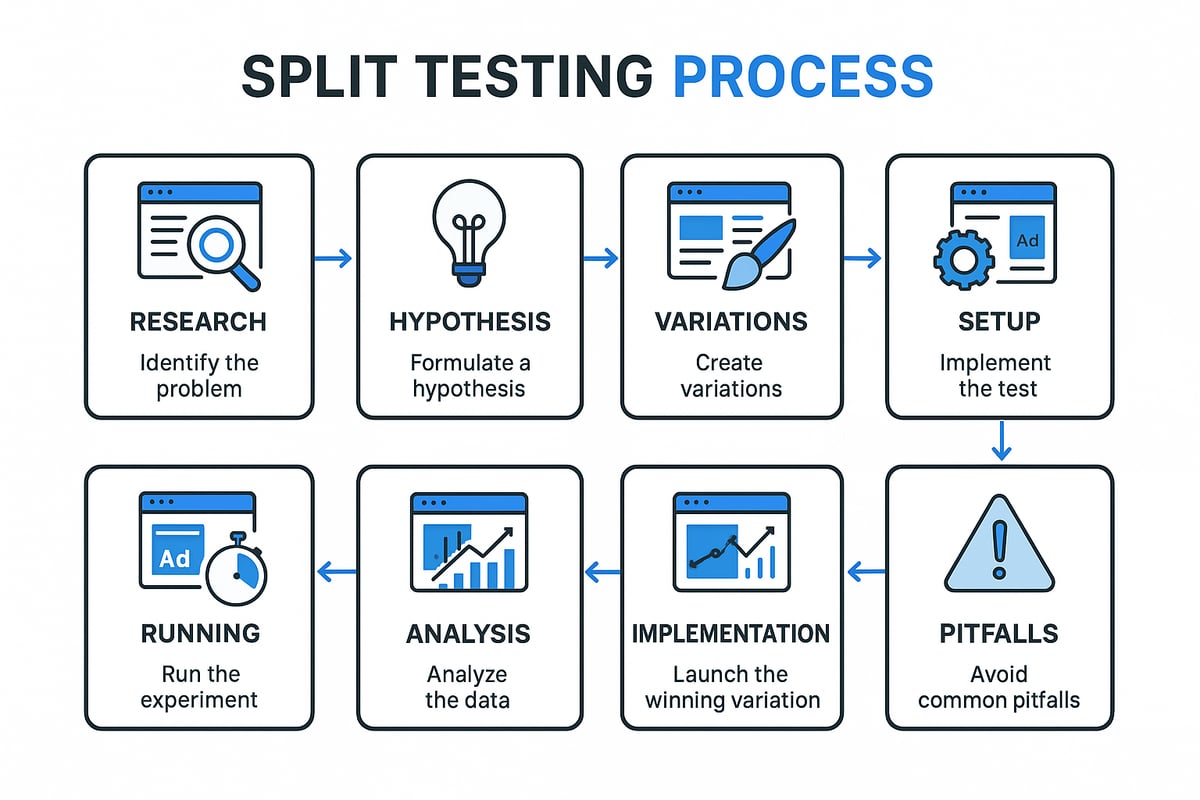

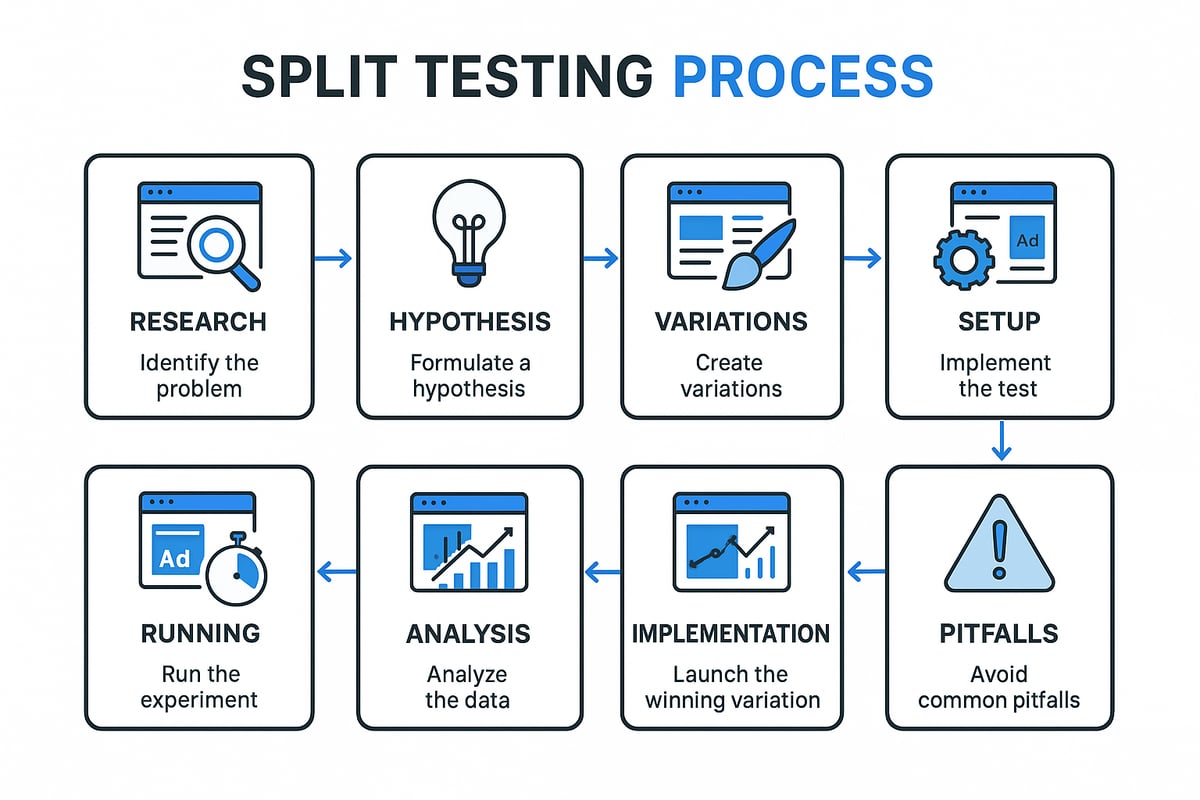

Running a successful split testing experiment in 2026 means following a clear, evidence-based process. Each step builds on the last, ensuring you move from strategy to execution with confidence. Let’s break down exactly how to do it.

Begin every split testing journey with deep research. Gather baseline data using analytics tools like Google Analytics, heatmaps, and funnel analysis. Pinpoint where users drop off or fail to convert. For example, Contentsquare’s funnel analysis can reveal friction points in your checkout process.

Pair this quantitative data with qualitative insights. Use customer polls, surveys, or session replays to hear the voice of your users. Look for recurring complaints or questions—these are goldmines for improvement ideas.

Set a clear objective for your test. For instance, aim to increase signups by 10 percent or reduce cart abandonment by 15 percent. This focus ensures your split testing efforts drive business value.

Turn your research into actionable hypotheses. In split testing, a hypothesis is a prediction, grounded in data, about how a change will impact user behaviour. Use a framework like: “If we change X, we expect Y because of Z.”

Back up your ideas with real evidence—don’t guess. For example, if analytics show users miss your CTA below the fold, your hypothesis might be: “Moving the CTA higher will boost clicks because more users will see it.”

Align each hypothesis with a business KPI, such as conversions or average order value. Prioritise ideas that are likely to move the needle in your split testing programme.

With your hypothesis in hand, design your test variations. In split testing, the control is your current version, while the variation is the new one you want to try. Use visual editors or work with developers for more complex changes.

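Change only one variable at a time so you can attribute any difference in results to that change. For example, test the banner offer while keeping the rest of the page identical.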

Test both small tweaks and bold redesigns, but always maintain a consistent user experience. Make sure your variations align with your brand and don’t disrupt user trust.

Now, set up your split testing experiment in your chosen platform—VWO, Contentsquare, or Optimizely are popular options. Decide how to split your traffic, usually 50/50 between control and variation.

Configure your test parameters, including the primary goal metric, the target audience, and the traffic allocation.

Ensure randomisation so users only see one version. Keep documentation of test settings for transparency. For a deep dive on setup and best practices, see Split Test Best Practices.
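Testing platforms handle assignment for you, but if you ever run a server-side test yourself, one common approach is to hash a stable user ID so each person lands in the same bucket on every visit. The function below is a hypothetical sketch of that idea: the name `assign_variant`, the use of SHA-256 and the 50/50 default are assumptions, not any vendor's implementation.

```python
import hashlib

def assign_variant(user_id: str, experiment: str,
                   variants=("control", "variation"), weights=(50, 50)) -> str:
    """Deterministically assign a user to one variant of an experiment.

    Hashing the experiment name together with the user ID means a returning
    visitor always sees the same version, while assignments in different
    experiments stay independent of each other.
    """
    if len(variants) != len(weights) or sum(weights) <= 0:
        raise ValueError("variants and weights must align and sum to a positive total")
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:15], 16) % sum(weights)  # near-uniform position in [0, total)
    cumulative = 0
    for variant, weight in zip(variants, weights):
        cumulative += weight
        if bucket < cumulative:
            return variant
    raise RuntimeError("unreachable: bucket is always below the weight total")

# Usage: the same visitor always gets the same version of this experiment.
print(assign_variant("visitor-1001", "banner-offer-test"))
control_share = sum(
    assign_variant(f"visitor-{i}", "banner-offer-test") == "control" for i in range(10_000)
) / 10_000
print(f"control share: {control_share:.3f}")  # close to 0.5
```

Because assignment depends only on the IDs, no per-user state needs storing, which suits server-side tests. You still need a stable identifier, such as a logged-in account or a first-party cookie.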

Once live, monitor your split testing experiment closely. Watch for technical issues, broken links, or slow load times. Let the test run its full course—don’t stop early, or you risk unreliable results.

Check in periodically to ensure there are no anomalies, but avoid making changes mid-test. VWO recommends at least a two-week run, even for high-traffic sites.
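How long is long enough? A rough answer comes from the sample size needed to detect the uplift you care about. The sketch below uses the standard normal-approximation formula for comparing two conversion rates. The 3% baseline, 10% relative lift, 5,000 daily visitors, 80% power and rounding up to whole weeks are illustrative assumptions; the 14-day floor mirrors the two-week guidance above.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed per variant to detect a relative lift in conversion rate.

    Normal-approximation formula for a two-sided test of two proportions.
    """
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_power * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)

def estimate_duration_days(n_per_variant: int, n_variants: int,
                           daily_visitors: int, min_days: int = 14) -> int:
    """Days needed to reach the sample size, with a floor, rounded up to whole weeks."""
    days = math.ceil(n_per_variant * n_variants / daily_visitors)
    days = max(days, min_days)
    return math.ceil(days / 7) * 7  # whole weeks cover weekday/weekend cycles

n = sample_size_per_variant(baseline=0.03, relative_lift=0.10)
print(f"{n:,} visitors per variant")  # roughly 53,000
print(estimate_duration_days(n, n_variants=2, daily_visitors=5_000), "days")  # 28
```

Under these assumptions the test needs about four weeks. Halving the lift you want to detect roughly quadruples the sample size, which is why low-traffic sites are better off testing bolder changes.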

Record interim data, but wait for the test to finish before deciding on a winner. Patience pays off in split testing, ensuring your conclusions are sound.

Once your split testing period ends, dive into the data. Use your tool’s reporting dashboard to compare conversion rates, click-throughs, or revenue between control and variation.

Assess whether the difference is statistically significant. Did the new version deliver a real uplift, or was it just random chance? For example, Ben.nl’s layout tweak led to a validated 17.63 percent conversion increase.
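Most tools run the significance check for you, but it helps to know what sits underneath. A common frequentist choice for conversion rates is the pooled two-proportion z-test, sketched below in Python. The visitor and conversion counts are hypothetical, not Ben.nl's data, and your platform may use a different method.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Pooled two-proportion z-test. Returns (z, two-sided p-value)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)  # conversion rate if A and B were identical
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in the data; cannot test")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical results: control 5.0% vs variation 5.8% on 10,000 visitors each.
z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=580, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")  # p is about 0.012, below the 0.05 threshold
```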

Document all findings, even if the test “failed.” Every split testing experiment offers valuable insights that inform your next move.

If your variation wins, roll it out to all users and watch post-launch metrics. Confirm the improvement is sustained in real-world use.

Use what you’ve learned to fuel your next round of split testing. Build a roadmap of future experiments, targeting new bottlenecks or opportunities.

Share results with your team to foster a culture of learning. Remember, continuous optimisation through split testing leads to lasting gains.

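Even experienced marketers can trip up in split testing. Classic mistakes include stopping tests too early, changing several elements at once, and launching variations without proper QA.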

Always QA each variation thoroughly before launch. Use audience segmentation to focus on the right users. Embrace every result as a learning opportunity.

Split testing is not a one-off project—it’s an ongoing process that, when done right, can transform your digital performance.

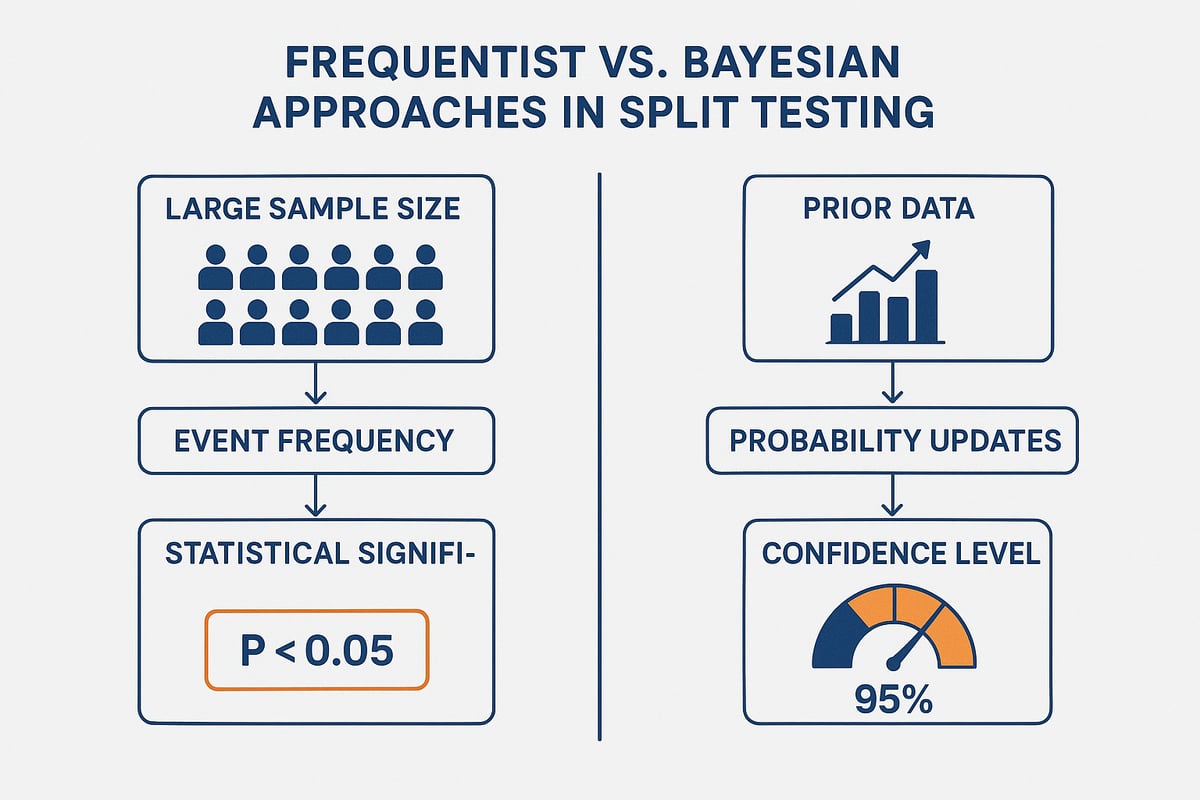

Understanding statistical models is crucial if you want to run split testing that delivers real, actionable results. The models you choose will shape how you interpret your data, how quickly you reach conclusions, and how confident you can be in your findings. Let’s break down the essentials.

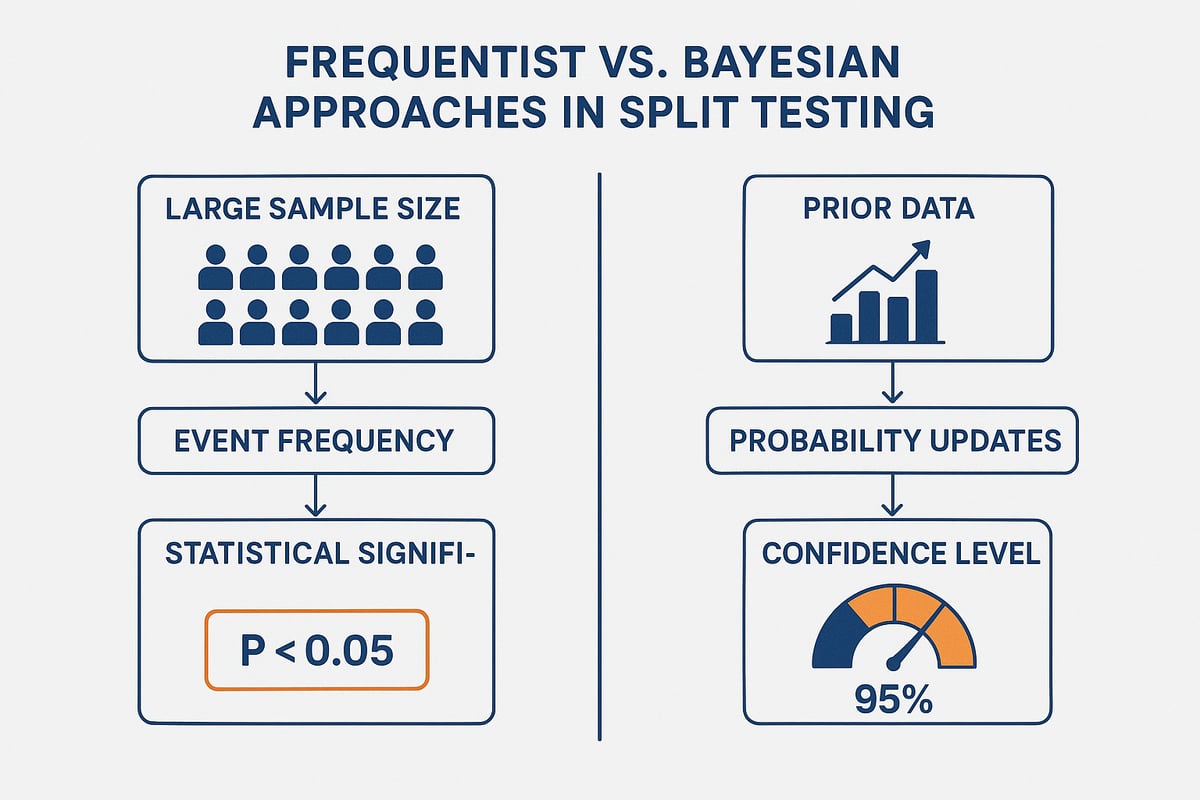

When running split testing, you’ll often hear about Frequentist and Bayesian statistics. The Frequentist model is the traditional choice: it asks how likely your observed data would be if there were no real difference, and it usually relies on a sample size fixed in advance, which often means collecting a lot of data before you can decide. Bayesian methods instead combine a prior belief with the observed data to estimate the probability that each variant is best, which many teams find easier to interpret and which can support decisions sooner, especially on sites with less traffic.

Here’s a quick comparison:

| Model | Pros | Cons |
|---|---|---|
| Frequentist | Robust, well-known, widely accepted | Slower, needs more data |
| Bayesian | Faster, works with smaller samples | Needs careful prior selection |

Some recent research, like the Learning Across Experiments and Time: Tackling Heterogeneity in A/B Testing paper, explores how new frameworks can make split testing more reliable, even with practical constraints. Ultimately, your choice depends on your traffic, timeline, and risk tolerance.
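The Bayesian approach can be sketched with a Beta-Binomial model: start from a prior for each conversion rate, update it with the observed conversions, then estimate the probability that the variation beats the control. The sketch below assumes a uniform Beta(1, 1) prior (the prior selection mentioned in the comparison table) and uses Monte Carlo sampling from Python's standard library; the counts are hypothetical.

```python
import random

def prob_variation_beats_control(conv_a: int, n_a: int, conv_b: int, n_b: int,
                                 draws: int = 50_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(rate_B > rate_A) under Beta-Binomial posteriors.

    Assumes a uniform Beta(1, 1) prior for each conversion rate, so each
    posterior is Beta(1 + conversions, 1 + non-conversions).
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws

prob = prob_variation_beats_control(500, 10_000, 580, 10_000)
print(f"P(variation beats control) is about {prob:.3f}")
```

With these hypothetical counts the probability comes out above 99%, a statement many stakeholders find easier to read than a p-value. A different prior, or far less data, can change the answer noticeably.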

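Statistical significance is the bedrock of trustworthy split testing. It tells you how unlikely your results would be if there were no real difference between versions. Most marketers aim for a 95% confidence level (a 5% significance threshold): if the two versions truly performed the same, a difference as large as the one you observed would show up less than 5% of the time. Strictly speaking, that is not the same as a 5% chance that your result is a fluke, but it is a sensible bar for acting on a result.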

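To ensure significance, fix your sample size and test duration before launch, and don’t call a winner until the test reaches them.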

If you stop a split testing experiment too early, you risk making decisions on incomplete data. Patience is rewarded here, as only properly run tests will give you the confidence to act.
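To see why stopping early is risky, the simulation below runs hypothetical A/A tests in which both versions share the same true 5% conversion rate, so every "significant" result is a false positive. It compares checking the p-value after each of ten batches of visitors with checking once at the planned end. All parameters are illustrative assumptions.

```python
import math
import random
from statistics import NormalDist

def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    if p_pool in (0.0, 1.0):
        return 1.0  # no variation at all, so no evidence of a difference
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

def simulate_aa_tests(n_tests=300, looks=10, visitors_per_look=500, rate=0.05, seed=1):
    """Run A/A tests (no real difference) and compare two stopping rules.

    Returns (share flagged significant at ANY interim look,
             share flagged significant at the planned final look only).
    """
    rng = random.Random(seed)
    any_look_hits = final_look_hits = 0
    for _ in range(n_tests):
        conv_a = conv_b = n = 0
        flagged = False
        for _ in range(looks):
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_look))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_look))
            n += visitors_per_look
            if p_value(conv_a, n, conv_b, n) < 0.05:
                flagged = True  # a peeker would have stopped and declared a winner here
        any_look_hits += flagged
        final_look_hits += p_value(conv_a, n, conv_b, n) < 0.05
    return any_look_hits / n_tests, final_look_hits / n_tests

peeking_rate, planned_rate = simulate_aa_tests()
print(f"false positives when peeking: {peeking_rate:.0%}")
print(f"false positives at planned end: {planned_rate:.0%}")
```

Checking once should flag roughly 5% of these tests, while stopping at the first significant look flags a much larger share. If you need to monitor results as they arrive, use a sequential testing method designed for interim looks rather than a fixed-horizon test.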

Once your split testing experiment is complete, it’s time to dig into the numbers. Start by comparing key metrics like conversion rates, click-throughs, and revenue per visitor. Look for clear winners, but also check if the uplift is meaningful for your business goals.
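To judge whether an uplift is meaningful for the business, look at a confidence interval around it rather than the point estimate alone. This sketch uses a simple normal-approximation (Wald) interval, an assumption that works reasonably for large samples; the counts are hypothetical, not results from a real test.

```python
import math
from statistics import NormalDist

def uplift_with_ci(conv_a: int, n_a: int, conv_b: int, n_b: int,
                   confidence: float = 0.95) -> dict:
    """Absolute and relative uplift of B over A, with a Wald interval on the difference."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)  # unpooled standard error
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return {
        "absolute_uplift": diff,
        "relative_uplift": diff / p_a,
        "ci_low": diff - z * se,
        "ci_high": diff + z * se,
    }

result = uplift_with_ci(conv_a=500, n_a=10_000, conv_b=580, n_b=10_000)
print(f"relative uplift: {result['relative_uplift']:.0%}")
print(f"95% CI for the absolute difference: "
      f"{result['ci_low']:+.2%} to {result['ci_high']:+.2%}")
```

Here the relative uplift is 16%, but the interval runs from roughly +0.17 to +1.43 percentage points, so the true gain could be small. Whether that lower end justifies a rollout is a business decision, not a statistical one.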

Document both successful and unsuccessful outcomes. For example, a layout tweak that increases conversions by 17% is worth rolling out, but even a flat result provides valuable learning. Present findings in a way that non-technical stakeholders can understand, using charts or simple tables.

Every split testing result, positive or negative, should feed into your ongoing optimisation roadmap.

Not every split testing experiment will produce a clear winner. Sometimes results are inconclusive or even negative. That’s not a failure—it’s part of building a knowledge base. Use these outcomes to refine your hypotheses and improve future experiments.

Create a log of all tests, noting what was tried, what worked, and what didn’t. This habit encourages a culture of experimentation and learning across your team. Remember, continuous split testing—even when the result is “no impact”—drives incremental gains over time.
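A spreadsheet is enough for a test log, but a fixed set of fields keeps entries consistent. The sketch below is one hypothetical structure in Python (the field names and the example entry are made up) that exports to CSV so the log can be shared with non-technical colleagues.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields

@dataclass
class ExperimentRecord:
    name: str
    hypothesis: str
    primary_metric: str
    start_date: str  # ISO format, e.g. "2026-01-05"
    end_date: str
    outcome: str     # e.g. "win", "loss" or "inconclusive"
    learning: str    # what the team will do differently next time

def log_to_csv(records: list[ExperimentRecord]) -> str:
    """Serialise experiment records to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(ExperimentRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()

log = [
    ExperimentRecord(
        name="Banner offer",
        hypothesis="If we show '10% Off First Order', first orders rise because the saving is immediate",
        primary_metric="first-order conversion rate",
        start_date="2026-01-05",
        end_date="2026-01-19",
        outcome="inconclusive",
        learning="Difference too small to detect; test a bolder offer next",
    ),
]
print(log_to_csv(log))
```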

The best marketers treat every test as a stepping stone towards better business performance.

Choosing the right split testing tools is critical for any marketer or business aiming to boost conversions in 2026. With the sheer variety of platforms and features on offer, knowing what to prioritise can save you time, money, and headaches.

When comparing split testing platforms, focus on features that make experimentation efficient and insightful. Look for intuitive visual editors that allow you to set up tests without heavy developer involvement. The ability to run multiple experiments in parallel on isolated audiences is a must for scaling your optimisation efforts.

Seamless integration with analytics platforms, heatmaps, and session replay tools gives you a complete view of user behaviour. Fast and accurate reporting dashboards help you make decisions quickly. AI-driven insights, like those discussed in AI website optimization insights, are becoming standard, making it easier to spot winning variations and automate routine tasks.

Choose tools that fit your team’s skillset and business goals. Prioritise platforms that support mobile and desktop testing, and provide robust segmentation options.

The split testing market is evolving rapidly. Leading platforms such as VWO, Contentsquare, Optimizely, and Convert all offer robust testing features, but they differ in pricing, usability, and support. Google Optimize, once a popular free option, was discontinued in September 2023.

Here’s a quick comparison:

| Platform | Visual Editor | AI Features | Multivariate | Mobile Support | Free Trial |
|---|---|---|---|---|---|
| VWO | Yes | Yes | Yes | Yes | Yes |
| Contentsquare | Yes | Yes | Yes | Yes | No |
| Optimizely | Yes | Yes | Yes | Yes | No |
| Google Optimize (discontinued September 2023) | Yes | Limited | Yes | Yes | Yes |
| Convert | Yes | Limited | Yes | Yes | Yes |

According to the Split Testing Software Market Size, Growth, Share, & Analysis Report – 2033, demand for AI-powered and privacy-friendly split testing tools is set to surge. Consider factors like ease of setup, customer support, and advanced targeting when making your choice.

Getting the most from your split testing tool means setting up every experiment with care. Start by ensuring proper tracking and analytics integration, so every user action is captured accurately. Always run thorough QA on all variations, across devices and browsers, before going live.

Train your team on how to use the platform and design effective tests. Use clear documentation and consistent naming conventions to avoid confusion. Monitor tests for technical glitches and review your testing processes regularly to adapt to new business needs.

A well-structured approach keeps your split testing experiments reliable and your results actionable.

For ecommerce brands, especially those on Shopify, split testing is a game-changer. Marketing XP specialises in designing and running split testing experiments that focus on real-world impact, not just theory.

By combining CRO best practices, analytics, and AI-powered insights, Marketing XP helps businesses optimise product pages, checkout flows, and user journeys for maximum conversion. Their hands-on, practical approach ensures every test drives measurable results.

Business owners can also access training and coaching to run their own split testing campaigns, leveraging AI tools for ongoing improvement. If you want specialist support and proven strategies for Shopify split testing, Marketing XP is ready to help you make every visitor count.

The world of split testing is evolving at breakneck speed. As we look to 2026, digital marketers and business owners will need to adapt to new technologies and shifting user expectations. Mastering these innovations will ensure your split testing strategy remains ahead of the curve.

AI is reshaping how split testing is done. Modern platforms now offer automated test creation, predictive analytics, and real-time data analysis. With tools like Contentsquare’s Sense Analyst, marketers can spot high-impact opportunities with minimal manual effort.

Automated segmentation enables you to run targeted experiments for specific user groups. AI-driven insights help you refine your split testing hypotheses and interpret results more quickly. For those interested in leveraging advanced analytics, Data analytics for marketers offers practical guidance on using AI and data to inform every test.

The result? Marketers spend less time on number crunching and more time crafting winning strategies.

Split testing is no longer just about static A/B comparisons. The future lies in hyper-personalised, dynamic testing that adapts in real time to each user. Platforms can now serve multiple variations based on user behaviour, location, or device.

Imagine split testing product recommendations or messaging tailored to returning customers versus first-time visitors. This level of personalisation increases engagement and ROI, as users feel the website is speaking directly to them.

Dynamic split testing does add complexity, but when managed well, the payoff is higher conversion rates and more satisfied customers.

In 2026, split testing is just one piece of a much larger puzzle. Leading businesses combine split testing with UX research, behavioural analytics, and multichannel experiments. The goal is continuous, data-driven improvement across every digital touchpoint.

A holistic approach means involving marketing, product, and design teams in the experimentation process. Regular testing cycles ensure you are always learning, adapting, and iterating for better results.

Integrating split testing with broader CRO strategies supports a culture of experimentation, where every idea is validated by real user data before rollout.

Data privacy is becoming a top priority for both users and regulators. As browsers restrict cookies and privacy-first analytics take centre stage, split testing methods must adapt. Server-side testing and compliant analytics tools will be vital for maintaining accurate results while respecting user consent.

Transparent data handling and clear opt-in processes are now essential parts of any split testing programme. Marketers will need to stay informed about regulations and invest in technology that keeps tests reliable and legal.

Embracing privacy-friendly split testing ensures you can keep optimising without risking user trust or falling foul of new laws.

If you’re reading this and thinking “That all sounds great, but where do I actually start to see real results?”, you’re not alone. Split testing can feel overwhelming, especially with all the tools and data out there. The good news is, you don’t have to figure it all out on your own. I help small businesses like yours turn experiments into more leads and sales, not just nice reports. If you want some honest advice tailored to your website or campaigns, book a free 45-minute consultation and let’s make your next test one that actually makes you money.

In 2026, digital marketers and business owners face more competition than ever. Data-driven decision-making is now essential for anyone who wants to stay ahead and grow their business.

Mastering split testing gives you a powerful edge. It lets you compare versions of your site, ads, or user journeys, so you can stop guessing and start making changes that actually increase conversions, save budget, and create better customer experiences.

This guide shows you exactly how to master split testing in 2026. We’ll cover the fundamentals, explain the difference from A/B testing, reveal the business value, walk through the step-by-step process with examples, dive into statistical models, help you choose the right tools, and explore future trends.

Split testing is the backbone of data-driven marketing. If you've ever wondered why some websites seem to convert visitors into customers effortlessly, split testing is usually the secret sauce behind the scenes.

Split testing is a controlled experiment where you randomly show users different versions of a web page, ad, or email to see which performs best. You might hear terms like split testing, A/B testing, or multivariate testing used together, but they have subtle differences.

A/B testing usually compares just two versions, A and B, while split testing can include more variations or even radically different designs. Multivariate testing goes further, testing combinations of several elements at once.

Picture a chocolate ecommerce site testing two banner offers: one with "Free Delivery Over £20" and another with "10% Off First Order." Each visitor sees only one version, unaware they're part of a test. The original is called the control, while the new version is the variation.

Common use cases include landing pages, checkout flows, email campaigns, and ad creatives. Split testing is foundational to conversion rate optimisation strategies, helping you move from guesswork to growth.

You might hear marketers use split testing and A/B testing as if they mean the same thing. Technically, A/B testing is about comparing just two versions: A and B. Split testing focuses on splitting your traffic between multiple versions, whether that's two, three, or more.

Multivariate testing is a step up, letting you test multiple elements—like headline, image, and button text—at the same time. According to Contentsquare, split testing often means comparing completely different layouts, not just small tweaks.

So, when should you use each? Use A/B testing for quick, focused changes, like CTA button colour. Use split testing if you're evaluating big design overhauls. Multivariate testing helps when you want to understand how different elements interact. All of them aim to find the variant that drives the most conversions.

Relying on gut instinct or “best practices” often leads to missed opportunities. Split testing lets you make decisions based on real user behaviour, not the HiPPO (Highest Paid Person’s Opinion) or industry trends that may not fit your audience.

Businesses see measurable gains: higher conversion rates, increased revenue, and lower bounce rates. Take Ben.nl, who boosted conversions by 17.63% just by adjusting where the colour palette appeared on product pages. Even seasoned marketers get it wrong sometimes—split testing lets your audience “vote” with their actions.

In today’s competitive digital landscape, split testing is a proven driver of business growth. It’s how leaders stay ahead.

Think split testing is only for big brands or high-traffic sites? Think again. Any business, regardless of size, can benefit. Another myth: it’s about throwing random ideas at the wall. In reality, effective split testing starts with evidence-based hypotheses and a clear plan.

Some believe split testing is the entire CRO process. Not true—it’s a key tool, but real optimisation involves research, analysis, and ongoing iteration. As Contentsquare points out, split testing is about validating ideas, not just discovering them.

Bottom line: split testing is scalable, structured, and universally applicable across industries.

Companies that embrace split testing consistently report better conversion rates and higher revenue. Small changes, such as tweaking a CTA colour or image placement, can lead to impressive results.

Industry benchmarks from platforms like VWO and Contentsquare show that split testing works for ecommerce, SaaS, B2B, and service businesses alike. It’s a staple for anyone serious about digital growth.

Getting your split testing process right before you start is where the real magic happens. A little planning upfront saves you wasted time and delivers results that move the needle.

Start by pinpointing where split testing will have the greatest impact. Focus on high-value pages like product pages, landing pages, and checkout flows. These are the spots where even small tweaks can lead to a surge in conversions.

Look for elements that directly influence user action. This could be headlines, call-to-action buttons, images, forms, testimonials, or even entire layouts. For inspiration on where to start, check out these Landing page optimisation tips.

Use analytics to spot bottlenecks and high-exit pages. Prioritise elements that influence your main metrics, whether that’s sales, leads, or signups. Remember, split testing is most powerful when it targets areas that drive your business goals.

Effective split testing relies on more than guesswork. Use quantitative data from sources like Google Analytics, heatmaps, and funnel analysis to see where users drop off. Pair this with qualitative insights from customer feedback, surveys, or session replays.

Heatmaps, for example, can show you exactly where attention fades or confusion sets in. Funnel analysis pinpoints stages where users abandon ship. By combining numbers with real user stories, you get a complete picture.

The goal is to base every split testing idea on solid data, not assumptions. Identify recurring patterns and friction points, then use these insights to uncover your biggest opportunities for improvement.

A strong split testing hypothesis is the backbone of any successful experiment. Don’t just test random ideas. Instead, create a clear, evidence-based prediction: “If we change X, we expect Y to happen because of Z.”

Use a framework to keep it structured. Rate your hypothesis by potential impact, confidence, and how easy it is to implement. For example, if data shows users ignore your signup button, you might predict that moving it higher will increase clicks.

Avoid the trap of testing for the sake of it. Focus on changes that are likely to shift the needle on your key metrics. Structured hypotheses ensure your split testing isn’t just busywork, but a strategic lever for growth.

With a list of hypotheses in hand, it’s time to prioritise. Score each idea using frameworks like ICE (Impact, Confidence, Ease) or PIE (Potential, Importance, Ease). This helps you work smarter, not harder.

Start with “quick wins” that are simple to implement and likely to show results fast. Plan to test one variable at a time so you can be confident about what caused any change.

Make sure your split testing roadmap is always tied back to business priorities. For example, testing a checkout tweak usually beats fiddling with the footer. Iterative, focused testing builds momentum and delivers compounding gains over time.

Running a successful split testing experiment in 2026 means following a clear, evidence-based process. Each step builds on the last, ensuring you move from strategy to execution with confidence. Let’s break down exactly how to do it.

Begin every split testing journey with deep research. Gather baseline data using analytics tools like Google Analytics, heatmaps, and funnel analysis. Pinpoint where users drop off or fail to convert. For example, Contentsquare’s funnel analysis can reveal friction points in your checkout process.

Pair this quantitative data with qualitative insights. Use customer polls, surveys, or session replays to hear the voice of your users. Look for recurring complaints or questions—these are goldmines for improvement ideas.

Set a clear objective for your test. For instance, aim to increase signups by 10 percent or reduce cart abandonment by 15 percent. This focus ensures your split testing efforts drive business value.

Turn your research into actionable hypotheses. In split testing, a hypothesis is a prediction, grounded in data, about how a change will impact user behaviour. Use a framework like: “If we change X, we expect Y because of Z.”

Back up your ideas with real evidence—don’t guess. For example, if analytics show users miss your CTA below the fold, your hypothesis might be: “Moving the CTA higher will boost clicks because more users will see it.”

Align each hypothesis with a business KPI, such as conversions or average order value. Prioritise ideas that are likely to move the needle in your split testing programme.

With your hypothesis in hand, design your test variations. In split testing, the control is your current version, while the variation is the new one you want to try. Use visual editors or work with developers for more complex changes.

Change only one variable at a time for clarity. For example:

Test both small tweaks and bold redesigns, but always maintain a consistent user experience. Make sure your variations align with your brand and don’t disrupt user trust.

Now, set up your split testing experiment in your chosen platform—VWO, Contentsquare, or Optimizely are popular options. Decide how to split your traffic, usually 50/50 between control and variation.

Configure your test parameters:

Ensure randomisation so users only see one version. Keep documentation of test settings for transparency. For a deep dive on setup and best practices, see Split Test Best Practices.

Once live, monitor your split testing experiment closely. Watch for technical issues, broken links, or slow load times. Let the test run its full course—don’t stop early, or you risk unreliable results.

Check in periodically to ensure there are no anomalies, but avoid making changes mid-test. VWO recommends at least a two-week run, even for high-traffic sites.
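One anomaly worth checking for is a sample ratio mismatch (SRM): a 50/50 test that ends up with noticeably unequal visitor counts usually points to a tracking or redirect bug rather than chance. The sketch below runs a chi-square goodness-of-fit test on the visitor counts. The `srm_p_value` name and the visitor numbers are hypothetical, and the 0.001 alert threshold is a commonly used convention, not a fixed rule.

```python
import math


def srm_p_value(control_users: int, variation_users: int, expected_share: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for a two-way traffic split."""
    total = control_users + variation_users
    expected = (total * expected_share, total * (1 - expected_share))
    observed = (control_users, variation_users)
    chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # With 1 degree of freedom, P(chi-square > x) = erfc(sqrt(x / 2))
    return math.erfc(math.sqrt(chi2 / 2))


p = srm_p_value(10_000, 10_600)
if p < 0.001:
    print(f"Possible sample ratio mismatch (p = {p:.2g}); check the setup before trusting results")
else:
    print(f"Traffic split looks healthy (p = {p:.3f})")
```

An SRM alert does not tell you which version is better; it tells you the data may be unreliable, so fix the cause before reading the results.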

Record interim data, but wait for the test to finish before deciding on a winner. Patience pays off in split testing, ensuring your conclusions are sound.

Once your split testing period ends, dive into the data. Use your tool’s reporting dashboard to compare conversion rates, click-throughs, or revenue between control and variation.

Assess whether the difference is statistically significant. Did the new version deliver a real uplift, or was it just random chance? For example, Ben.nl’s layout tweak led to a validated 17.63 percent conversion increase.
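Most tools run this check for you, but for a simple conversion metric the classic frequentist check is a two-proportion z-test. The sketch below assumes independent visitors and a large enough sample for the normal approximation; the function name and the counts in the usage line are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # shared rate if there is no real difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("need at least one conversion and one non-conversion")
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Control: 500 of 10,000 converted; variation: 580 of 10,000 converted
z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=580, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below 0.05 corresponds to the 95% confidence level most marketers aim for.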

Document all findings, even if the test “failed.” Every split testing experiment offers valuable insights that inform your next move.

If your variation wins, roll it out to all users and watch post-launch metrics. Confirm the improvement is sustained in real-world use.

Use what you’ve learned to fuel your next round of split testing. Build a roadmap of future experiments, targeting new bottlenecks or opportunities.

Share results with your team to foster a culture of learning. Remember, continuous optimisation through split testing leads to lasting gains.

Even experienced marketers can trip up in split testing. Classic mistakes include stopping tests early, changing several elements at once, and launching variations without proper QA.

Always QA each variation thoroughly before launch. Use audience segmentation to focus on the right users. Embrace every result as a learning opportunity.

Split testing is not a one-off project—it’s an ongoing process that, when done right, can transform your digital performance.

Understanding statistical models is crucial if you want to run split testing that delivers real, actionable results. The models you choose will shape how you interpret your data, how quickly you reach conclusions, and how confident you can be in your findings. Let’s break down the essentials.

When running split testing, you’ll often hear about Frequentist and Bayesian statistics. The Frequentist model is the traditional choice: it asks how likely your observed data would be if there were no real difference, and it relies on event frequency in large samples, so you need a lot of data before making decisions. Bayesian methods combine prior results with the observed data to estimate the probability that one version beats another, which can make results easier to interpret and act on sooner, especially for sites with less traffic.

Here’s a quick comparison:

| Model | Pros | Cons |
|---|---|---|
| Frequentist | Robust, well-known, widely accepted | Slower, needs more data |
| Bayesian | Faster, works with smaller samples | Needs careful prior selection |
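To make the Bayesian side concrete, here is a minimal sketch of the common Beta-Binomial model for conversion rates: each version's conversion rate gets a Beta posterior, and random draws estimate the probability that the variation beats the control. The flat `Beta(1, 1)` prior is an assumption, exposed through `prior_alpha` and `prior_beta` because prior selection is the main thing to get right. The function name and counts are hypothetical.

```python
import random


def prob_variation_beats_control(conv_a: int, n_a: int, conv_b: int, n_b: int,
                                 prior_alpha: float = 1.0, prior_beta: float = 1.0,
                                 draws: int = 100_000, seed: int = 42) -> float:
    """Monte Carlo estimate of P(rate_B > rate_A) under Beta-Binomial models."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Posterior for each version: Beta(prior + conversions, prior + non-conversions)
        rate_a = rng.betavariate(prior_alpha + conv_a, prior_beta + n_a - conv_a)
        rate_b = rng.betavariate(prior_alpha + conv_b, prior_beta + n_b - conv_b)
        wins += rate_b > rate_a
    return wins / draws


prob = prob_variation_beats_control(500, 10_000, 580, 10_000)
print(f"Chance the variation beats the control: {prob:.1%}")
```

The output reads as a direct statement ("the variation is very likely better"), which is a large part of why Bayesian reports appeal to non-statisticians; a stronger prior pulls the estimate towards past results when data is thin.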

Some recent research, like the Learning Across Experiments and Time: Tackling Heterogeneity in A/B Testing paper, explores how new frameworks can make split testing more reliable, even with practical constraints. Ultimately, your choice depends on your traffic, timeline, and risk tolerance.

Statistical significance is the bedrock of trustworthy split testing. It tells you whether your results are likely to be real or just due to chance. Most marketers aim for a 95% confidence level, which means that if there were no real difference between the versions, a result as extreme as the one you observed would occur less than 5% of the time.
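A confidence interval expresses the same idea in a form stakeholders often find clearer: a plausible range for the true difference in conversion rates. The sketch below uses the simple Wald interval, an assumption that works reasonably for large samples; when the 95% interval excludes zero, the result roughly corresponds to significance at the 5% level. The function name and counts are hypothetical.

```python
import math
from statistics import NormalDist


def diff_confidence_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                             confidence: float = 0.95):
    """Wald confidence interval for (rate_B - rate_A)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    diff = p_b - p_a
    return diff - z * se, diff + z * se


lo, hi = diff_confidence_interval(500, 10_000, 580, 10_000)
print(f"Variation minus control: {lo:+.2%} to {hi:+.2%} (percentage points)")
```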

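To ensure significance, calculate the sample size you need before launch and let the test run until every variation reaches it.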

If you stop a split testing experiment too early, you risk making decisions on incomplete data. Patience is rewarded here, as only properly run tests will give you the confidence to act.

Once your split testing experiment is complete, it’s time to dig into the numbers. Start by comparing key metrics like conversion rates, click-throughs, and revenue per visitor. Look for clear winners, but also check if the uplift is meaningful for your business goals.
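If you export raw totals from your tool, the key metrics and relative uplift take only a few lines to compute. The sketch below is illustrative: the `VariantResult` class, `relative_uplift` helper, and all figures are hypothetical, chosen so that conversions rise by 17% while revenue per visitor rises by only 10%, which shows why both metrics are worth checking.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    name: str
    visitors: int
    conversions: int
    revenue: float

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.visitors

    @property
    def revenue_per_visitor(self) -> float:
        return self.revenue / self.visitors


def relative_uplift(control: float, variation: float) -> float:
    """Relative change of the variation versus the control (0.17 means +17%)."""
    return (variation - control) / control


control = VariantResult("control", visitors=10_000, conversions=500, revenue=25_000.0)
variation = VariantResult("variation", visitors=10_000, conversions=585, revenue=27_500.0)

cr_uplift = relative_uplift(control.conversion_rate, variation.conversion_rate)
rpv_uplift = relative_uplift(control.revenue_per_visitor, variation.revenue_per_visitor)
print(f"Conversion rate uplift: {cr_uplift:+.1%}")
print(f"Revenue per visitor uplift: {rpv_uplift:+.1%}")
```

Uplift alone does not show whether a difference is real, so pair these numbers with a significance check before rolling anything out.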

Document both successful and unsuccessful outcomes. For example, a layout tweak that increases conversions by 17% is worth rolling out, but even a flat result provides valuable learning. Present findings in a way that non-technical stakeholders can understand, using charts or simple tables.

Every split testing result, positive or negative, should feed into your ongoing optimisation roadmap.

Not every split testing experiment will produce a clear winner. Sometimes results are inconclusive or even negative. That’s not a failure—it’s part of building a knowledge base. Use these outcomes to refine your hypotheses and improve future experiments.

Create a log of all tests, noting what was tried, what worked, and what didn’t. This habit encourages a culture of experimentation and learning across your team. Remember, continuous split testing—even when the result is “no impact”—drives incremental gains over time.
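A spreadsheet is enough for a test log, as long as every entry has the same fields. The sketch below appends records to a CSV file and refuses incomplete entries; the file name `experiment_log.csv`, the field list, and the example record are hypothetical and should be adapted to what your team actually tracks.

```python
import csv
import os

LOG_FIELDS = ["test_name", "hypothesis", "start", "end",
              "primary_kpi", "result", "learning"]


def log_test(path: str, **entry: str) -> None:
    """Append one experiment record to a CSV log, writing a header for a new file."""
    missing = [field for field in LOG_FIELDS if field not in entry]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    is_new = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=LOG_FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow({field: entry[field] for field in LOG_FIELDS})


# Usage: an inconclusive test still earns a place in the log
log_test("experiment_log.csv",
         test_name="banner-offer",
         hypothesis="If we show '10% Off First Order', we expect more first orders "
                    "because survey respondents mention price most often",
         start="2026-01-05", end="2026-01-19",
         primary_kpi="first-order conversion rate",
         result="inconclusive",
         learning="Offer framing alone did not move conversions; test delivery messaging next")
```

Requiring a `learning` field for every entry nudges the team to write down what a flat or negative result taught them.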

The best marketers treat every test as a stepping stone towards better business performance.

Choosing the right split testing tools is critical for any marketer or business aiming to boost conversions in 2026. With the sheer variety of platforms and features on offer, knowing what to prioritise can save you time, money, and headaches.

When comparing split testing platforms, focus on features that make experimentation efficient and insightful. Look for intuitive visual editors that allow you to set up tests without heavy developer involvement. The ability to run multiple experiments in parallel on isolated audiences is a must for scaling your optimisation efforts.
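Running experiments in parallel on isolated audiences is usually done with mutually exclusive "layers": traffic is hashed into slots, and each experiment owns a separate range of slots, so no user is in two experiments at once. The sketch below illustrates that idea under stated assumptions; the layer name, experiment names, and slot ranges are hypothetical, and real platforms implement this in their own ways.

```python
import hashlib
from collections import Counter
from typing import Optional, Tuple


def bucket(key: str, buckets: int) -> int:
    """Stable bucket number in [0, buckets) derived from a SHA-256 hash."""
    return int(hashlib.sha256(key.encode("utf-8")).hexdigest()[:8], 16) % buckets


# Hypothetical layer: each experiment owns a disjoint range of 100 traffic slots,
# so a user can be enrolled in at most one experiment from this layer.
LAYER_NAME = "site-layer-1"
EXPERIMENT_SLOTS = {
    "checkout-copy": range(0, 40),
    "product-images": range(40, 80),
    # slots 80-99 are held back and see no experiment from this layer
}


def enrolment(user_id: str) -> Optional[Tuple[str, str]]:
    """Return (experiment, variant) for a user, or None if not enrolled."""
    slot = bucket(f"{LAYER_NAME}:{user_id}", 100)
    for experiment, slots in EXPERIMENT_SLOTS.items():
        if slot in slots:
            # A second, independent hash splits this experiment's audience 50/50
            variant = "variation" if bucket(f"{experiment}:{user_id}", 2) else "control"
            return experiment, variant
    return None


counts = Counter()
for i in range(10_000):
    enrolled = enrolment(f"user-{i}")
    counts[enrolled[0] if enrolled else "none"] += 1
print(counts)  # expect roughly 40% / 40% / 20%
```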

Seamless integration with analytics platforms, heatmaps, and session replay tools gives you a complete view of user behaviour. Fast and accurate reporting dashboards help you make decisions quickly. AI-driven insights, like those discussed in AI website optimization insights, are becoming standard, making it easier to spot winning variations and automate routine tasks.

Choose tools that fit your team’s skillset and business goals. Prioritise platforms that support mobile and desktop testing, and provide robust segmentation options.

The split testing market is evolving rapidly. Leading platforms such as VWO, Contentsquare, Optimizely, and Convert all offer robust testing features, but they differ in pricing, usability, and support. Google Optimize, once a popular free option, was discontinued by Google in September 2023.

Here’s a quick comparison:

| Platform | Visual Editor | AI Features | Multivariate | Mobile Support | Free Trial |
|---|---|---|---|---|---|
| VWO | Yes | Yes | Yes | Yes | Yes |
| Contentsquare | Yes | Yes | Yes | Yes | No |
| Optimizely | Yes | Yes | Yes | Yes | No |
| Google Optimize (discontinued 2023) | Yes | Limited | Yes | Yes | Yes |
| Convert | Yes | Limited | Yes | Yes | Yes |

According to the Split Testing Software Market Size, Growth, Share, & Analysis Report – 2033, demand for AI-powered and privacy-friendly split testing tools is set to surge. Consider factors like ease of setup, customer support, and advanced targeting when making your choice.

Getting the most from your split testing tool means setting up every experiment with care. Start by ensuring proper tracking and analytics integration, so every user action is captured accurately. Always run thorough QA on all variations, across devices and browsers, before going live.

Train your team on how to use the platform and design effective tests. Use clear documentation and consistent naming conventions to avoid confusion. Monitor tests for technical glitches and review your testing processes regularly to adapt to new business needs.

A well-structured approach keeps your split testing experiments reliable and your results actionable.

For ecommerce brands, especially those on Shopify, split testing is a game-changer. Marketing XP specialises in designing and running split testing experiments that focus on real-world impact, not just theory.

By combining CRO best practices, analytics, and AI-powered insights, Marketing XP helps businesses optimise product pages, checkout flows, and user journeys for maximum conversion. Their hands-on, practical approach ensures every test drives measurable results.

Business owners can also access training and coaching to run their own split testing campaigns, leveraging AI tools for ongoing improvement. If you want specialist support and proven strategies for Shopify split testing, Marketing XP is ready to help you make every visitor count.

The world of split testing is evolving at breakneck speed. As we look to 2026, digital marketers and business owners will need to adapt to new technologies and shifting user expectations. Mastering these innovations will ensure your split testing strategy remains ahead of the curve.

AI is reshaping how split testing is done. Modern platforms now offer automated test creation, predictive analytics, and real-time data analysis. With tools like Contentsquare’s Sense Analyst, marketers can spot high-impact opportunities with minimal manual effort.

Automated segmentation enables you to run targeted experiments for specific user groups. AI-driven insights help you refine your split testing hypotheses and interpret results more quickly. For those interested in leveraging advanced analytics, Data analytics for marketers offers practical guidance on using AI and data to inform every test.

The result? Marketers spend less time on number crunching and more time crafting winning strategies.

Split testing is no longer just about static A/B comparisons. The future lies in hyper-personalised, dynamic testing that adapts in real time to each user. Platforms can now serve multiple variations based on user behaviour, location, or device.

Imagine split testing product recommendations or messaging tailored to returning customers versus first-time visitors. This level of personalisation increases engagement and ROI, as users feel the website is speaking directly to them.

Dynamic split testing does add complexity, but when managed well, the payoff is higher conversion rates and more satisfied customers.
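One widely used technique behind adaptive, real-time allocation is the multi-armed bandit, which shifts traffic towards better-performing variations while the test is still running. The sketch below shows Thompson sampling with a Beta-Bernoulli model; it is an illustration of the general idea, not how any particular platform works, and the class name, true conversion rates, and seeds are hypothetical.

```python
import random


class ThompsonSampler:
    """Beta-Bernoulli Thompson sampling over a fixed set of variations."""

    def __init__(self, variations, seed=None):
        self.rng = random.Random(seed)
        # Beta(1, 1) prior for every variation, stored as [alpha, beta]
        self.params = {v: [1, 1] for v in variations}

    def choose(self) -> str:
        # Draw a plausible conversion rate per variation and serve the highest draw
        draws = {v: self.rng.betavariate(a, b) for v, (a, b) in self.params.items()}
        return max(draws, key=draws.get)

    def update(self, variation: str, converted: bool) -> None:
        if converted:
            self.params[variation][0] += 1  # one more success
        else:
            self.params[variation][1] += 1  # one more failure


# Usage: simulate visitors against hypothetical true conversion rates
true_rates = {"control": 0.05, "variation": 0.10}
sampler = ThompsonSampler(true_rates, seed=1)
visitor_rng = random.Random(2)
served = {v: 0 for v in true_rates}
for _ in range(5_000):
    v = sampler.choose()
    served[v] += 1
    sampler.update(v, visitor_rng.random() < true_rates[v])
print(served)  # most traffic should drift towards "variation"
```

For personalisation, one simple extension is to keep a separate sampler per segment (for example, returning versus first-time visitors), so each group can converge on a different winner. The trade-off is that bandits optimise conversions during the test rather than producing a clean, fixed-split significance result.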

In 2026, split testing is just one piece of a much larger puzzle. Leading businesses combine split testing with UX research, behavioural analytics, and multichannel experiments. The goal is continuous, data-driven improvement across every digital touchpoint.

A holistic approach means involving marketing, product, and design teams in the experimentation process. Regular testing cycles ensure you are always learning, adapting, and iterating for better results.

Integrating split testing with broader CRO strategies supports a culture of experimentation, where every idea is validated by real user data before rollout.

Data privacy is becoming a top priority for both users and regulators. As browsers restrict third-party cookies and privacy-first analytics take centre stage, split testing methods must adapt. Server-side testing and compliant analytics tools will be vital for maintaining accurate results while respecting user consent.

Transparent data handling and clear opt-in processes are now essential parts of any split testing programme. Marketers will need to stay informed about regulations and invest in technology that keeps tests reliable and legal.

Embracing privacy-friendly split testing ensures you can keep optimising without risking user trust or falling foul of new laws.

If you’re reading this and thinking “That all sounds great, but where do I actually start to see real results?”, you’re not alone. Split testing can feel overwhelming, especially with all the tools and data out there. The good news is, you don’t have to figure it all out on your own. I help small businesses like yours turn experiments into more leads and sales, not just nice reports. If you want honest advice tailored to your website or campaigns, book your free 45-minute consultation and let’s make your next test one that actually makes you money.